The context

A variety of popular scripts are invisibly embedded on many web pages, harvesting a snapshot of your computer’s configuration to build a digital fingerprint that can be used to track you across the web, even if you clear your cookies. It is only a matter of time before this tracking technology takes over the ‘Internet of Things’ we are starting to surround ourselves with. From billboards to books and from cars to coffeemakers, physical computing and smart devices are becoming more ubiquitous than we can fathom. For users of smart devices, the very first click or touch on a smart object signs us up to be tracked online and to become another data point in the web of ‘device’ fingerprinting, with no conspicuous privacy policies and no apparent warnings.

Development process

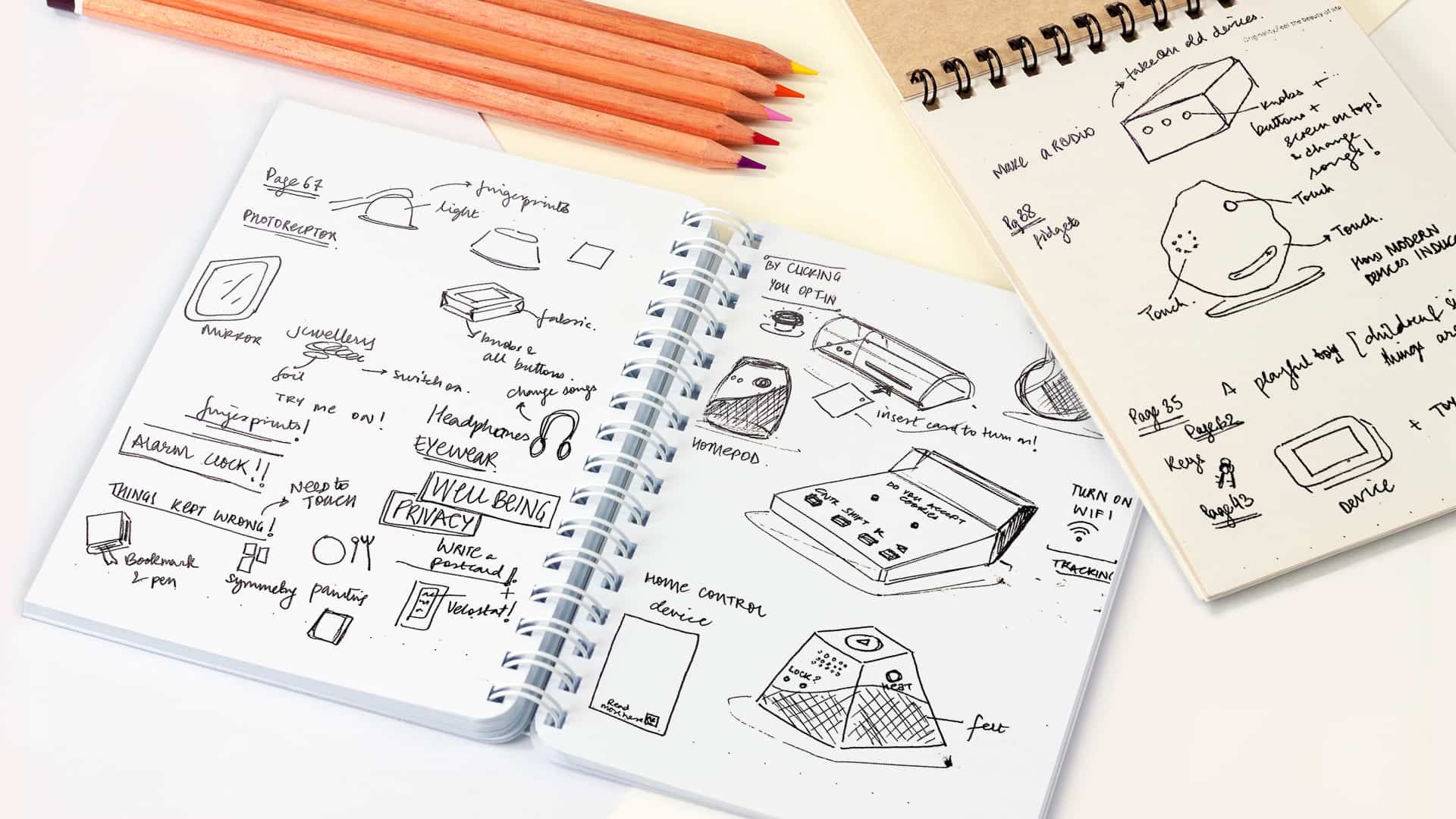

The interactive experience was inspired by the ‘How Might We’ question we raised after our research on data privacy. We then began sketching out the details of the interaction:

- Which interactions did we want: touch, sound, voice, or tapping into user behaviour?

- Which tangible objects should we use: everyday objects, a new product with built-in affordances for interaction, or digital products like mobile phones and laptops?

- Which programming platform should we use?

- What would the setup and user experience look like?

Technical approach

Our mentors proposed we look at ml5, a machine learning library that works with p5.js and can recognise objects using computer vision. We tried the YOLO model in ml5 and iterated on the code, trying to recognise objects like remotes, mobile phones, pens, or books. The challenge with this code lay in creating the visuals we wanted to accompany each recognised object, in tracking multiple interactions at once, and in overlaying those visuals on the video being captured for computer vision. It was very exciting for us to use this library because we did not have to depend on hardware interactions: we could use a setup with no wires and no visible digital interactions, creating a mundane scene that then delivers the surprise of the tracking visuals and reinforces the concept.
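To make the overlay step more concrete, here is a minimal sketch of how detections could be turned into boxes to draw over the video. It is not our project code. It assumes each detection arrives as an object with `label`, `confidence`, and `x`, `y`, `w`, `h` normalised to the 0..1 range; that shape, the helper name `toOverlayBoxes`, the label names, and the colours are all assumptions made for illustration.

```javascript
// Hypothetical mapping from object label to the colour of its tracking visual.
// The label names are assumed to follow the COCO class list.
const OVERLAY_STYLES = new Map([
  ["remote", "#ff3b30"],
  ["cell phone", "#34c759"],
  ["book", "#007aff"],
]);

// Assumed detection shape: { label, confidence, x, y, w, h },
// with x, y, w, h normalised to 0..1 relative to the video frame.
function toOverlayBoxes(detections, frameWidth, frameHeight, minConfidence = 0.5) {
  return detections
    // Keep only the objects we have visuals for, above a confidence threshold.
    .filter((d) => OVERLAY_STYLES.has(d.label) && d.confidence >= minConfidence)
    // Scale the normalised box to pixel coordinates of the video frame.
    .map((d) => ({
      label: d.label,
      colour: OVERLAY_STYLES.get(d.label),
      x: Math.round(d.x * frameWidth),
      y: Math.round(d.y * frameHeight),
      w: Math.round(d.w * frameWidth),
      h: Math.round(d.h * frameHeight),
    }));
}

// Example with made-up detections for a 640 x 480 frame.
const sampleDetections = [
  { label: "book", confidence: 0.82, x: 0.25, y: 0.5, w: 0.5, h: 0.25 },
  { label: "person", confidence: 0.95, x: 0, y: 0, w: 1, h: 1 },
  { label: "remote", confidence: 0.3, x: 0.1, y: 0.1, w: 0.1, h: 0.1 },
];
const boxes = toOverlayBoxes(sampleDetections, 640, 480);
console.log(boxes);
```

In a p5.js sketch, a helper like this could run each time the detector reports results, with the returned boxes drawn over the video frame inside `draw()` using `stroke()`, `noFill()`, and `rect()`. Because every detection becomes its own box, several objects can be tracked in the same frame.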