The Brief

The brief asked us to investigate how digital tools can create presence, community, and engagement across distance and time. The experiment had to enable communication between multiple participants separated by physical distance, in an innovative and meaningful way.

The Process

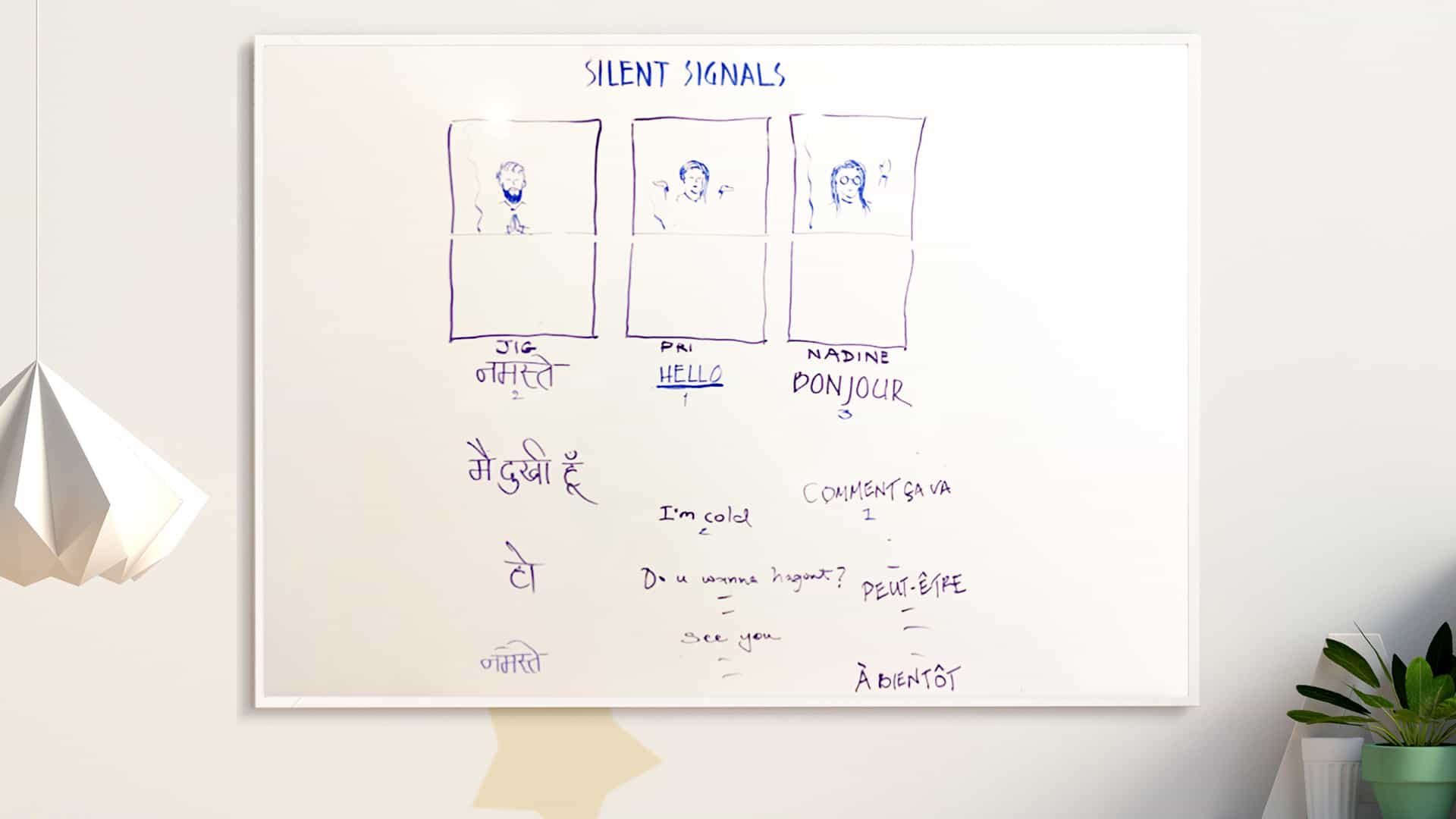

When we first started thinking about communication, we realised that the three of us spoke three different languages: Hindi, French and English. We imagined teams collaborating on projects across international borders, isolated seniors who may speak only one language, and globetrotting millennials who forge connections throughout the world. How could we enable them to connect seamlessly across locations and overcome language barriers?

Our first idea was to build a translation tool that would allow people to text one another in two different languages. This would involve a translation API such as Google's Cloud Translation, which supports automatic language detection.

We then thought that it would be more natural and enjoyable for each user to speak their preferred language directly, without text as an intermediary. That would require a speech-to-text API and a text-to-speech API. The then newly released Web Speech API would fit the bill, as would the Microsoft Skype Translator API, which has the added benefit of direct speech-to-speech translation in some languages but is unfortunately not available for Hindi.

When we discovered that there were already several translation apps on the market, we decided to push the concept a step further and enable communication without speech at all, so we started looking into visual communication. We shared a desire to make our interactions more intuitive and natural.

The Solution

The result is a communication interface, built using p5.js, that enables users to send messages using simple gestures. It is intended to be a seamless interaction in which users' bodies become the controllers and triggers for messages. It does away with the keyboard as an input and takes communication into the physical realm, engaging people in embodied interactions. It can be used by multiple users simultaneously and works regardless of the distance between participants.

Gesture detection is based on PoseNet, and the experiment uses PubNub to send and receive the resulting messages. PoseNet is a machine learning model for real-time human pose estimation. Using the webcam, it tracks 17 nodes (keypoints) on the body and creates a skeleton that follows the person's movements. Using the node positions tracked by PoseNet, we were able to define the relationship of different body parts to one another, measure their relative distances and translate those relationships into code.
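To make the idea of "relative distances between body parts" concrete, here is a minimal sketch of how a gesture could be derived from pose keypoints. It is not the project's actual code: the function names (`getPart`, `distance`, `detectGesture`, `makePose`), the gesture labels, and the thresholds are all hypothetical, and the keypoint shape (`part`, `position`, `score`) is assumed to match what PoseNet reports. The poses are built by hand so the example runs without a webcam.

```javascript
// Illustrative sketch only: names, labels and thresholds are assumptions.
// Each keypoint is assumed to look like { part, position: { x, y }, score }.

// Look up a keypoint by part name, ignoring low-confidence detections.
function getPart(pose, name, minScore = 0.5) {
  const kp = pose.keypoints.find((k) => k.part === name);
  return kp && kp.score >= minScore ? kp.position : null;
}

// Euclidean distance between two points.
function distance(a, b) {
  return Math.hypot(a.x - b.x, a.y - b.y);
}

// Classify a pose into a gesture label, or null if nothing matches.
function detectGesture(pose) {
  const nose = getPart(pose, 'nose');
  const leftWrist = getPart(pose, 'leftWrist');
  const rightWrist = getPart(pose, 'rightWrist');
  const leftShoulder = getPart(pose, 'leftShoulder');
  const rightShoulder = getPart(pose, 'rightShoulder');
  if (!nose || !leftWrist || !rightWrist || !leftShoulder || !rightShoulder) {
    return null;
  }

  // Shoulder width acts as a body-relative unit, so the thresholds do not
  // depend on how far the person stands from the camera.
  const shoulderWidth = distance(leftShoulder, rightShoulder);
  if (shoulderWidth === 0) return null;

  // Image y grows downward, so a smaller y means "higher up".
  if (leftWrist.y < nose.y && rightWrist.y < nose.y) return 'handsUp';
  if (distance(leftWrist, rightWrist) / shoulderWidth < 0.5) {
    return 'handsTogether';
  }
  return null;
}

// Helper for building a hand-made pose from { partName: [x, y] }.
function makePose(parts, score = 0.9) {
  return {
    keypoints: Object.entries(parts).map(([part, [x, y]]) => ({
      part,
      position: { x, y },
      score,
    })),
  };
}

const raised = makePose({
  nose: [100, 100],
  leftWrist: [60, 50],
  rightWrist: [140, 50],
  leftShoulder: [70, 150],
  rightShoulder: [130, 150],
});
console.log(detectGesture(raised)); // "handsUp"
```

In a setup like the one described above, the label returned by a function of this kind would be the payload handed to the messaging layer (PubNub in this project), so that every connected participant receives the same gesture message.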

My contribution encompassed the original concept development, the visual design of the UI and UX elements, as well as the first workable build of the code. During the debrief phase of the project, I was responsible for the visual aesthetics and creation of the demo video.